Beyond single-vector threats: perceptual and decisional joint attacks in multi-agent deep reinforcement learning


- Citation: Guo W, Liu G, Zhou Z. Beyond single-vector threats: perceptual and decisional joint attacks in multi-agent deep reinforcement learning. Artif. Intell. Auton. Syst. 2026(1):0001, https://doi.org/10.55092/aias20260001.

- DOI: 10.55092/aias20260001

- Copyright: Copyright 2026 by the authors. Published by ELSP.


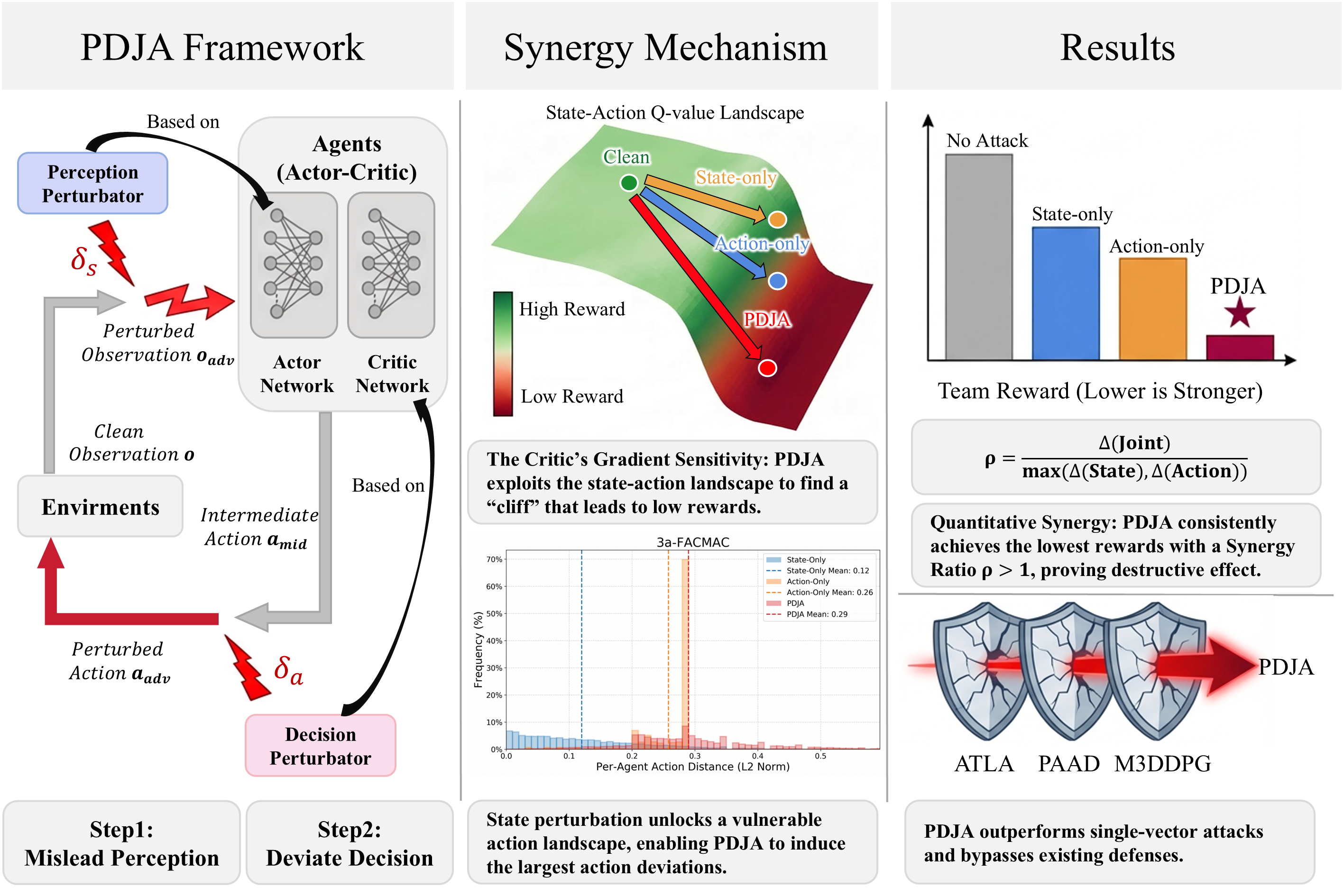

Multi-agent deep reinforcement learning (MADRL) has shown potential in solving complex cooperative and competitive tasks among agents, and algorithms based on the Actor-Critic architecture have demonstrated excellent performance in continuous spaces. However, the robustness of such algorithms against adversarial attacks has not been thoroughly investigated. Existing studies focus primarily on either perceptual attacks or decisional attacks in isolation, without considering the impact of combining the two. In this paper, we propose the Perceptual-Decisional Joint Attack (PDJA) framework, which induces a strong synergistic disruption in MADRL systems. The framework executes a sequential, two-stage attack: (1) it perturbs the agent's perception by utilizing the actor's gradients; (2) based on the agent's reaction to this perturbed state, it attacks the agent's decision by using the critic's Q-value to apply a final perturbation directly to the output action. We evaluate the attack framework in the Multi-Agent Particle Environment (MPE) and Multi-Agent MuJoCo (MAMuJoCo), demonstrating the synergistic effect of disrupting both agent perception and decision-making, as well as the limitations of current defense strategies in handling such joint attacks.
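The two-stage sequence described above can be illustrated with a minimal single-agent sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the tanh-linear `actor`, the toy quadratic `critic` (which simply scores the clean policy's action as optimal), the sign-gradient step rule, the stage 1 objective, the step sizes `eps` and `eta`, and the choice to evaluate the critic at the true state.

```python
import math

# Hypothetical toy actor weights (not from the paper): action = tanh(W @ obs).
W = [[0.8, -0.3], [0.2, 0.5]]


def matvec(mat, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in mat]


def sign(x):
    return (x > 0) - (x < 0)


def actor(obs):
    return [math.tanh(z) for z in matvec(W, obs)]


def critic(state, action):
    # Toy critic (assumption): Q(s, a) = -||a - actor(s)||^2, so the clean
    # policy's action is optimal and any deviation lowers the Q-value.
    target = actor(state)
    return -sum((a - t) ** 2 for a, t in zip(action, target))


def perceptual_attack(obs, eps):
    """Stage 1: sign-gradient step on the observation using the actor's gradient.

    Assumed objective: L(obs') = actor(obs) . actor(obs'), minimised so the
    action is pushed away from the clean action.
    """
    a_clean = actor(obs)
    # For a tanh-linear actor, dL/dobs' at obs' = obs is W^T [(1 - a^2) * a].
    back = [(1.0 - a * a) * a for a in a_clean]
    grad = [sum(W[i][j] * back[i] for i in range(len(W))) for j in range(len(obs))]
    return [o - eps * sign(g) for o, g in zip(obs, grad)]


def decisional_attack(state, action, eta):
    """Stage 2: sign-gradient step on the action that lowers the critic's Q-value."""
    target = actor(state)
    dq_da = [-2.0 * (a - t) for a, t in zip(action, target)]
    stepped = [a - eta * sign(g) for a, g in zip(action, dq_da)]
    return [max(-1.0, min(1.0, a)) for a in stepped]  # keep the action in bounds


def joint_attack(state, eps=0.1, eta=0.1):
    obs_adv = perceptual_attack(state, eps)
    a_reaction = actor(obs_adv)  # the agent reacts to the perturbed observation
    a_final = decisional_attack(state, a_reaction, eta)
    return obs_adv, a_reaction, a_final


state = [0.6, -0.4]
obs_adv, a_reaction, a_final = joint_attack(state)
print("clean Q:          ", critic(state, actor(state)))
print("perception-only Q:", critic(state, a_reaction))
print("joint attack Q:   ", critic(state, a_final))
```

In this toy setting the second stage starts from the action the agent produced under the perturbed observation, so the action-level perturbation compounds the perception-level one rather than replacing it. A multi-agent version would apply the same two steps to each attacked agent's actor and to whichever critic the training algorithm provides.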

multi-agent deep reinforcement learning; adversarial attack; adversarial robustness; perception-decision joint attack
