InstaDrive: street view generation based on the unified instance segmentation input of vehicles and map elements

- Volume

- Citation: Wang Q, Wang Y, Wang H. InstaDrive: street view generation based on the unified instance segmentation input of vehicles and map elements. Robot Learn. 2026(1):0004, https://doi.org/10.55092/rl20260004.

- DOI: 10.55092/rl20260004

- Copyright: Copyright 2026 by the authors. Published by ELSP.

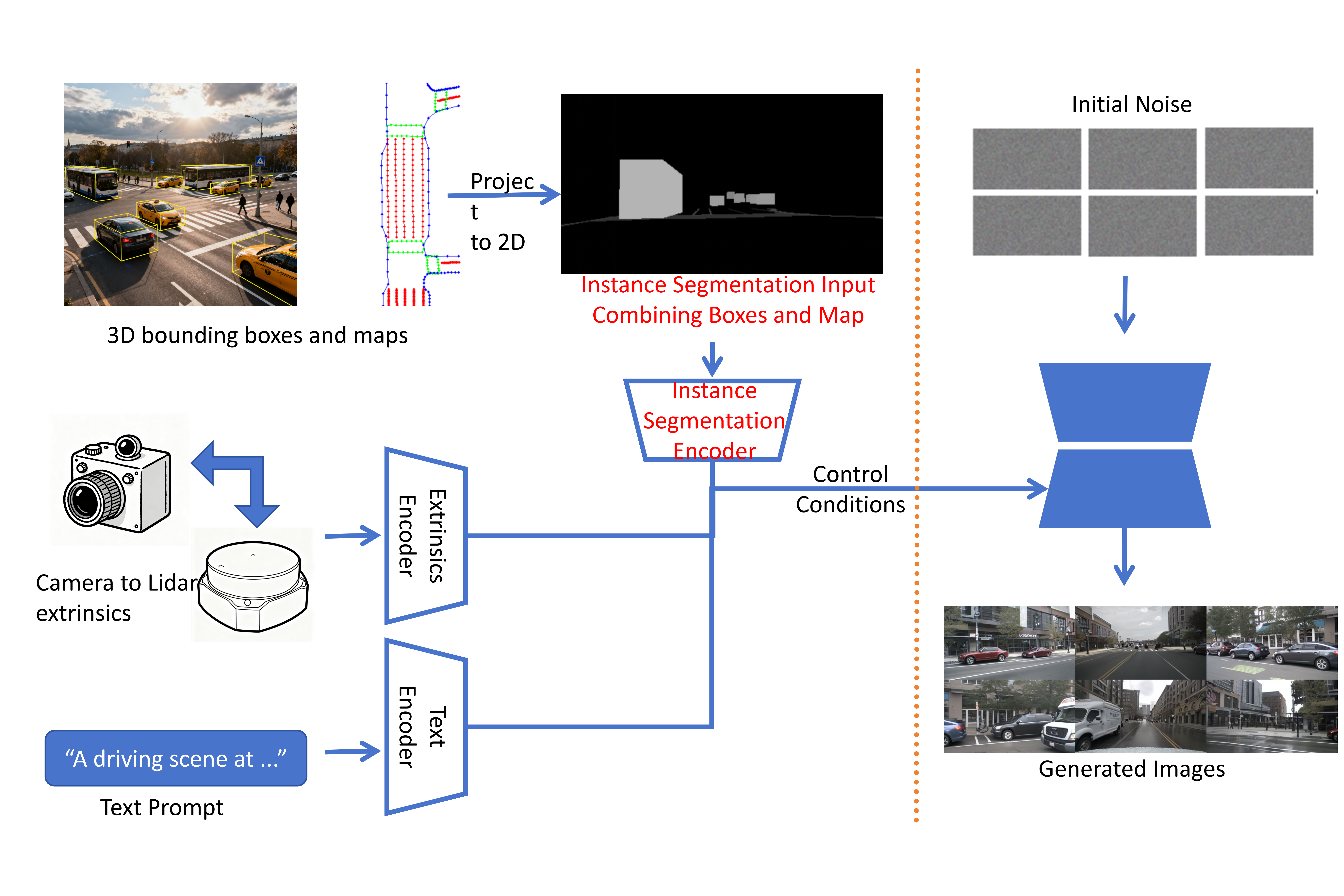

To address the cumbersome manual annotation and long-tailed data distribution that affect the training of autonomous driving detection models, this paper proposes InstaDrive. The method takes as input the 3D bounding boxes of vehicles, vectorized annotations of map elements in the bird's-eye view (BEV), the camera extrinsic parameters, and a text prompt. It encodes these inputs as control conditions by projecting them into 2D instance segmentation images, which then guide Stable Diffusion to generate panoramic camera images of the autonomous driving scene. The method can efficiently generate large amounts of labeled training data and can edit specific scene elements to construct corner cases. To address the consistency problem in multi-view image generation, InstaDrive enforces multi-view consistency at the input level and represents the occlusion relationships in the 2D views in a unified way. Experiments on the nuScenes dataset show that the method achieves excellent results on the task of editing the positions of vehicles and map elements.

driving scene generation; instance segmentation; Stable Diffusion; ControlNet
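The projection step described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes a pinhole camera, an ego frame with x forward, y left, z up, and an ego-to-camera extrinsic of the form `p_cam = R p + t`. It covers only vehicle boxes, not map elements, and fills each box's 2D bounding rectangle rather than its exact silhouette. All function names and numeric values are hypothetical. Painting boxes from farthest to nearest is one simple way to express occlusion in a single instance-ID map.

```python
import math

def box_corners(center, size, yaw):
    """Eight corners of a yaw-rotated 3D box in the ego frame (x fwd, y left, z up)."""
    cx, cy, cz = center
    l, w, h = size
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        (cx + c * dx - s * dy, cy + s * dx + c * dy, cz + dz)
        for dx in (-l / 2, l / 2)
        for dy in (-w / 2, w / 2)
        for dz in (-h / 2, h / 2)
    ]

def to_camera(p, R, t):
    """Assumed extrinsic convention: p_cam = R @ p + t (ego frame to camera frame)."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))

def project(p_cam, fx, fy, cx, cy):
    """Pinhole projection; camera frame is x right, y down, z forward."""
    X, Y, Z = p_cam
    return fx * X / Z + cx, fy * Y / Z + cy

def render_instance_map(boxes, R, t, intrinsics, width, height):
    """Return a height x width grid of instance IDs (0 = background)."""
    fx, fy, cx, cy = intrinsics
    canvas = [[0] * width for _ in range(height)]
    drawable = []
    for inst_id, (center, size, yaw) in enumerate(boxes, start=1):
        cam_pts = [to_camera(p, R, t) for p in box_corners(center, size, yaw)]
        if min(p[2] for p in cam_pts) <= 0.1:
            continue  # sketch only: skip boxes that reach behind the image plane
        uv = [project(p, fx, fy, cx, cy) for p in cam_pts]
        mean_depth = sum(p[2] for p in cam_pts) / len(cam_pts)
        drawable.append((mean_depth, inst_id, uv))
    # Painter's algorithm: draw far boxes first so nearer boxes overwrite them.
    for _, inst_id, uv in sorted(drawable, reverse=True):
        u0 = max(0, math.floor(min(u for u, _ in uv)))
        u1 = min(width - 1, math.ceil(max(u for u, _ in uv)))
        v0 = max(0, math.floor(min(v for _, v in uv)))
        v1 = min(height - 1, math.ceil(max(v for _, v in uv)))
        for v in range(v0, v1 + 1):
            for u in range(u0, u1 + 1):
                canvas[v][u] = inst_id
    return canvas

# Hypothetical front camera at the ego origin: cam x = -ego y, cam y = -ego z, cam z = ego x.
R_front = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
t_front = (0.0, 0.0, 0.0)
boxes = [
    ((10.0, 0.0, 0.0), (4.0, 2.0, 1.5), 0.0),  # instance 1: near vehicle
    ((20.0, 3.0, 0.0), (4.0, 2.0, 1.5), 0.0),  # instance 2: far vehicle, partly hidden
]
canvas = render_instance_map(boxes, R_front, t_front, (100.0, 100.0, 80.0, 45.0), 160, 90)
```

Because every camera view would be rendered from the same 3D boxes, editing a box's position in 3D changes all views together, which is the sense in which consistency is handled at the input level. A fuller version would rasterize the projected box faces or convex hull and would draw map elements into the same ID map.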
